With Meta encouraging its users to try out its generative AI image creation tools at every turn, it’s also now looking to integrate more transparency into the usage of AI images, in order to help users ascertain what’s real and what’s not.

Well, sort of.

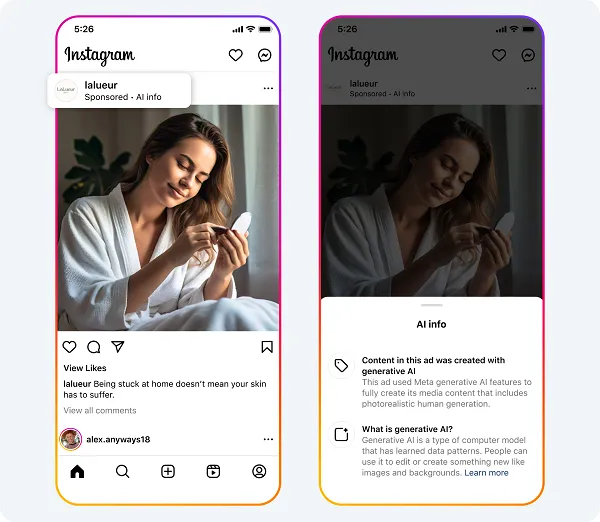

Today, Meta has outlined the latest improvements to its AI usage disclosures in ads which have used Meta’s in-house AI tools in their creation.

Meta began rolling out these labels last year, and it’s continued to refine and update the wording of these tags to improve transparency.

As per Meta:

“Our labels are designed to help people understand when images and videos have been created or significantly edited with our in-house generative AI tools. When an image or video is created or significantly edited with our generative AI creative features in our advertiser marketing tools, a label will appear in the three-dot menu or next to the “Sponsored” label.”

As noted, Meta’s working to improve these labels over time, with its current approach only applying to ads that have been “significantly” edited using its own AI tools.

“If an advertiser is using our in-house generative AI creative features and these tools do not result in significant edits to the image or video and do not include a photorealistic human, then we will not apply any AI labels.”

Meta doesn’t define what qualifies as “significant” in this respect, but the process adds an extra level of transparency to Meta-originated AI works.

Well, ads at least. Meta’s rules here don’t apply in the same way to regular posts, which have become a source of confusion for many Facebook users.

As you can see, posts like this are generating big engagement in the app, with many users seemingly unaware of, or unsuspecting of, AI creation tools.

Though many of these are also not created via Meta’s own AI generators, which do come with a custom watermark.

And Meta says that it’s working on a solution for images created in other AI apps as well:

“Providing transparency around our home-grown generative AI tools is a first step on our ads generative AI transparency journey. This year, we also plan to share more information on our approach to labeling ad images made or edited with non-Meta generative AI tools. We will continue to evolve our approach to labeling AI-generated content in partnership with experts, advertisers, policy stakeholders and industry partners as people’s expectations and the technology change.”

That could be a big step in dispelling misinformation, and ensuring transparency with such images.

Of course, Meta’s specifically talking about paid promotions here, and it’s not clear that the same qualifiers will be applied to regular posts. But hopefully, Meta’s exploring solutions that will ensure greater transparency across all post types, where possible.

Generative AI tools are creating a lot of confusion, though not necessarily in the ways that many expected. The big concern, heading into last year’s election cycle at least, was that AI deepfakes would be weaponized by political parties to sway voter opinions.

And that has happened, but not to the degree that many anticipated. Most of the AI misrepresentation has instead come via trashy, like-bait images, like the example above on Facebook, posted by users fishing for engagement, potentially with a view to on-selling their Facebook Pages at a later stage.

That’s less harmful, but it’s still misleading, and it still degrades the broader Facebook experience.

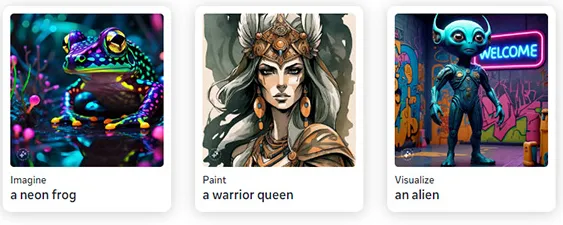

Yet, at the same time, Meta continues to double down on its own AI creation tools, with prompts like this in your main feed.

Why anyone would want to create images like this, I don’t know, but Meta seems determined to get more people using its Meta AI chatbot, as it seeks to make it the most used AI chatbot tool in the world.

But it’s also trying to provide more explainers on the legitimacy of images, because unlabeled AI pictures are misleading.

And really, Meta could probably reduce the impact of such images by not boosting these in-feed prompts at all, but the need to be the best seemingly outweighs that concern.

Which is one of the most confusing aspects of the AI push: people have complained for years about the impact of bots in social apps, and how they degrade the human experience. Yet now Meta wants to add more and more bots, and more fake content, into the mix.

It seems confusing, and more a showcase of what can be done, as opposed to what’s valuable. But as long as AI is the tech trend of the moment, that, I guess, will remain Meta’s focus as well.
