AI models that understand videos as well as text can unlock powerful new applications. At least, that’s what Jae Lee, the co-founder of Twelve Labs, believes.

Granted, Lee’s a little biased. Twelve Labs trains video-analyzing models for a range of use cases. But there may just be something to his assertion.

Using Twelve Labs’ models, users can search through videos for specific moments, summarize clips, or ask questions like “When did the person in the red shirt enter the restaurant?” It’s a powerful set of capabilities — which is perhaps why the company has attracted big-name backers including Nvidia, Samsung, and Intel.

Video search

To Lee, a data scientist by training, basic search never made sense for video. Keyword searches can pull up titles, tags, and descriptions, but can’t get at the actual content of clips.

“Video is the fastest-growing — and most data-intensive — medium, yet most organizations aren’t going to devote human resources to cull through all their video archives,” Lee told TechCrunch. “Even if you tried manually tagging, it wouldn’t solve the issue. Finding a specific moment or angle in videos can be like looking for a needle in a haystack.”

After failing to find a better solution, Lee recruited peers Aiden Lee, SJ Kim, Dave Chung, and Soyoung Lee to build one. That was the genesis of Twelve Labs, which trains models to map text to what’s happening inside a video, including actions, objects, and background sounds.

Models like Google’s Gemini can search through footage, and Microsoft and Amazon, among others, offer video analytics services to spot objects in clips. But Lee argues that Twelve Labs’ products stand apart with their customization options, which let customers tailor models using their own data.

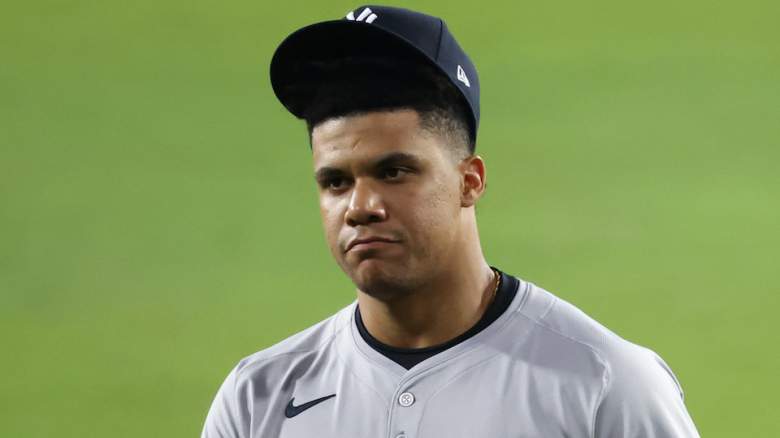

Twelve Labs co-founder and CEO Jae Lee. Image Credits: Twelve Labs

“Companies like OpenAI and Google are investing heavily in general-purpose multimodal models,” Lee said, “but these models aren’t optimized for video. Our differentiation lies in being video-first from the beginning … We believe video is deserving of our sole focus — it’s not an add-on.”

Devs can create apps on top of Twelve Labs models to search across video footage and more. The company’s tech can drive things like ad insertion, content moderation, and auto-generating highlight reels from clips.

When I spoke with Lee last year, I asked about the potential for bias in Twelve Labs’ models. It’s a big risk factor. A 2021 study found that training a video understanding model on clips of local news, which tends to cover crime in a racialized way, could cause the model to learn racist patterns.

Lee said at the time that Twelve Labs was planning to release model-ethics-related benchmarks and data sets. The company still hasn’t. In our recent chat, Lee assured me that these tools are on the way and that Twelve Labs conducts bias tests on all of its models prior to releasing them.

“We haven’t released formal bias benchmarks yet because we want to ensure that they’re meaningful, practical, and actionable,” he said. “Our overall goal is to develop benchmarks that not only hold us accountable, but also set a standard in the industry … Until we’ve fully accomplished this goal — and we have a team working on this — we’re actively working to create AI that empowers organizations responsibly, respects people’s civil liberties, and drives technological change.”

Lee added that Twelve Labs trains its models on a mix of public domain and licensed data, and doesn’t source customer data for training.

Growth mode

Video analysis remains core to what Twelve Labs does. But, in an effort to stay nimble, the company is also branching into areas like “any-to-any” search and multimodal embeddings.

One of Twelve Labs’ models, Marengo, can search across images and audio in addition to video, and accept a reference audio recording, image, or video clip to help guide a search.

Elsewhere, the company offers an API, the Embed API, to create multimodal embeddings for videos, text, images, and audio files. Embeddings are mathematical representations that capture the meaning of data points and the relationships between them, making them useful for applications like anomaly detection.
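To make the idea of embeddings concrete, here is a minimal sketch of how a similarity search over them might work. It does not use Twelve Labs' Embed API: the file names and the three-dimensional vectors are made up for illustration (real embeddings have hundreds or thousands of dimensions), and cosine similarity is assumed as the comparison measure because it is a common choice.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-written embeddings for a mixed library of video and audio.
# In practice an embedding model would produce these vectors.
library = {
    "clip_kitchen.mp4": [0.9, 0.1, 0.0],
    "clip_traffic.mp4": [0.1, 0.9, 0.2],
    "audio_sirens.wav": [0.0, 0.8, 0.6],
}

def search(query_vec, items, top_k=2):
    """Rank items by similarity to the query vector, most similar first."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in items.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Made-up embedding standing in for a text query such as "cars at an intersection".
query = [0.05, 0.85, 0.3]
results = search(query, library)

for name, score in results:
    print(f"{name}: {score:.3f}")
```

Because every item lives in the same vector space regardless of whether it started as video, audio, or text, one query can rank all of them together. The same distance measure can also flag outliers: an item whose similarity to everything else is low is a candidate anomaly.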

Twelve Labs’ growing product portfolio has helped the startup secure clients in the enterprise, media, and entertainment spaces. Two major partners are Databricks and Snowflake, both of which are building Twelve Labs tooling into their offerings.

Twelve Labs builds multimodal video understanding models. Some answer questions, others perform searches — and more. Image Credits: Twelve Labs

Databricks developed an integration that lets customers invoke Twelve Labs’ embedding service from existing data pipelines. Snowflake, meanwhile, is creating connectors to Twelve Labs models in Cortex AI, its fully managed AI service.

“We currently have 30,000-plus developers using our platform, ranging from individuals experimenting to major enterprises integrating our technology into their workflows,” Lee said. “For example, we’ve partnered with municipalities for use cases like real-time threat detection, enhancing emergency response times, and assisting in traffic management.”

As a show of strategic support, both Databricks and Snowflake invested in Twelve Labs this month through their respective venture arms. SK Telecom and HubSpot Ventures joined in, along with In-Q-Tel, an Arlington, Virginia-based nonprofit VC that invests in startups supporting U.S. intelligence capabilities.

The total new investments came to $30 million, bringing Twelve Labs’ total raised to $107.1 million. Lee says that the proceeds will be put toward product development and hiring.

“We’re in a very strong fiscal position, but saw an opportunity to deepen key strategic relationships with leaders who believe deeply in Twelve Labs,” Lee said. “We currently have 73 full-time employees, and are planning significant investments in hiring across engineering, research, and customer-facing roles.”

New hire

Speaking of hiring, Twelve Labs on Thursday announced that it’s adding a president to its C-suite: Yoon Kim, former CTO of SK Telecom and a key architect behind Apple’s Siri. Yoon will also serve as Twelve Labs’ chief strategy officer, spearheading the startup’s aggressive expansion plan.

“While it’s unusual for a company of Twelve Labs’ age and stage to hire a president, this move is a testament to the demand we’ve experienced,” Lee said, adding that Yoon will split time between Twelve Labs’ San Francisco HQ and its offices in Seoul. “Yoon is the right person to help us execute — he’ll be instrumental in driving future growth with key acquisitions, expanding our global presence, and aligning our teams toward ambitious goals.”

Lee says that the aim is to grow into new and adjacent verticals, like automotive and security, in the next few years. Considering In-Q-Tel’s involvement, security (and possibly defense work) seems like a shoo-in; Lee wouldn’t confirm outright.

“The investment from In-Q-Tel reflects the versatility and potential of our technology across many sectors, including national security,” Lee said. “We’re always open to exploring opportunities where our technology can have a positive, meaningful, and responsible impact that aligns with our ethical guidelines.”
