Last month, Apple Intelligence produced an embarrassing hallucination about the news story that everyone was talking about. It claimed that Luigi Mangione, the suspect accused of murdering UnitedHealthcare CEO Brian Thompson, had killed himself.

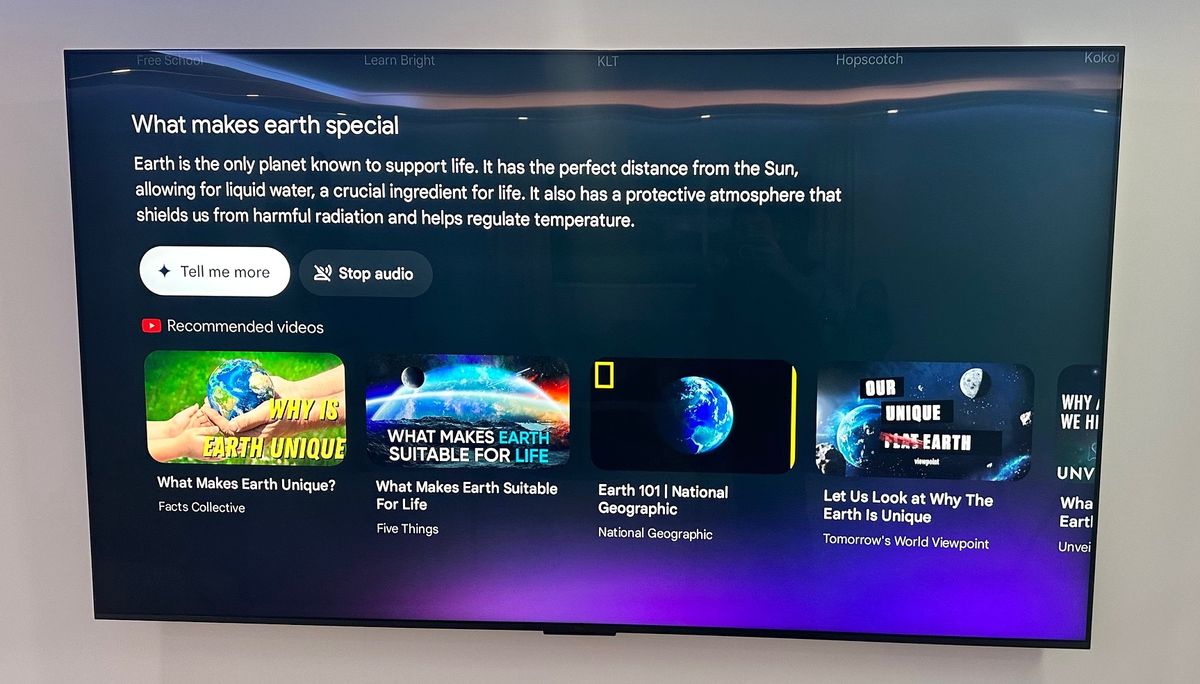

This week, Apple Intelligence produced two more embarrassing errors for BBC app users. The first claimed that darts player Luke Littler had won the PDC World Championship when he had only progressed to the final, while the second managed to fit two errors in just nine words: “Brazilian tennis player, Rafael Nadal, comes out as gay.”

Rafael Nadal, who is Spanish, hadn’t come out. The linked feature was actually about the Brazilian player Joao Lucas Reis da Silva and how he accidentally became an LGBT trailblazer by posting a birthday message to his boyfriend on Instagram. Weirdly, neither “Rafael” nor “Nadal” appears anywhere in the piece, making this hallucination all the more mysterious.

A BBC spokesperson was understandably irked by this. “It is essential that Apple fixes this problem urgently - as this has happened multiple times,” a statement on the story reads.

“As the most trusted news media organisation in the world, it is crucial that audiences can trust any information or journalism published in our name and that includes notifications.”

Apple is yet to comment on the latest BBC report, though the article notes that last time around the company opted to say nothing. Elsewhere, AppleInsider notes that Tim Cook previously stated that while Apple Intelligence's results would be of "very high quality", it would be "short of 100%".

We don't think he believes that short of 100% is acceptable for news headlines, which is why this needs to stop.

Shining a bright light on AI’s deficiencies

If there’s a positive to this story, it’s this: these mistakes are obvious, easily disproved and likely seen by multiple people.

The trouble with other AI hallucinations is that they’ll only ever be seen by one person at a time, as they’re generated on the fly to match a person’s very specific request.

Take Google search, for example. Estimates say there are "over 8.5 billion searches a day," and with Google now using an AI Overview to summarize a (sometimes dangerous) answer, plenty of these will be generating nonsense in the name of saving users a click.

It’s impossible to know how many people will blindly accept hallucinations as fact, and in most cases, inaccuracies will be benign. Nonetheless, it’s sad that the internet — once seen as a great educational equalizer — could be inadvertently making users ill-informed in the name of convenience.

With Apple Intelligence pushing these summaries to millions of users at the same time, it’s a bit different. While the BBC notes that the texts will be unique because “different combinations of notifications are summarised,” it’s likely that multiple people will see similar hallucinations, and that makes the falsehoods easier to debunk.

That’s especially true when, unlike search summaries, notifications are designed to encourage clickthrough. If a user taps on the notification, they’ll quickly learn that Luigi Mangione is alive, Rafael Nadal hasn’t come out, and Luke Littler hasn’t won the PDC World Championship yet (he actually did go on to win on Friday, as it happens).

Hopefully these early mistakes will make people a bit more skeptical of the wisdom of accepting AI shortcuts. If so, oddly, these high-profile embarrassments for Apple might be doing the world a big favor.

What Apple needs to do

The fact that the hallucinations have so far been relatively minor shouldn't be of great comfort to anyone.

No tragedies are going to occur because someone erroneously thought a darts player won a tournament early, but not all mistakes are equal. Should Apple Intelligence hallucinate something significant (a nuclear attack or a terrorist incident, say), the potential for mass panic is enormous.

The more false headlines it spews, the more Apple wipes away goodwill it had with the Apple Intelligence launch. It would be wise for the company to put the feature on indefinite pause until more safeguards can be put in place — both for its own reputation, and to avoid more serious mishaps.
