Visual Intelligence was one of the big new features announced as part of the iPhone 16 series launch, but until now users haven't been able to access it. With the launch of the iOS 18.2 developer beta, anyone signed up to the Apple Developer program can try it out, which is exactly what I've been doing.

Apple touts Visual Intelligence as a way to get quick answers about whatever is right in front of you, via your phone's camera. With a press-and-hold of the Camera Control, you can quickly open Visual Intelligence, even with your iPhone 16 locked. It's basically Apple's take on Google Lens or Circle to Search, except that it's limited to the iPhone 16 series, which makes it more exclusive.

There are a lot of potential uses for Visual Intelligence, and for these first impressions I tried out as many as I could get to work. Not everything Apple's promised seems to be ready yet, but there's more than enough to get a flavor of what it's trying to do.

Visual Intelligence: The features

Opening up Visual Intelligence with Camera Control is super simple, and comes with a fun rainbow-colored flash to indicate it's active. You can then take a snap with either the Camera Control or an on-screen shutter button, or zoom in up to 3x. This is digital zoom though, which can make for fuzzy shots that are harder for Visual Intelligence to read. Hopefully Apple will add support for telephoto camera zoom on compatible iPhones in the future.

Once you have an image captured, you can then in theory learn all sorts of things, like details about a location, or reviews and opening hours for a shop or eatery. While I could find this information via the search function, I couldn't get it to pop up immediately like Apple showed off in its demo clips, even though the feature was able to detect my location.

Perhaps it's because I'm using this in the U.K., which is a step behind the U.S. in Apple Intelligence's rollout, or just because this ability will appear in a later beta. At least for now you can just use the Google search function to get effectively the same information.

If there's enough text to read in the image, Visual Intelligence can generate a quick summary for you, similar to what you can do with your notifications or writing in the Notes app, or read it aloud if you can't or don't want to read it at that moment. This works well for a bottom line-style overview of a message, but it is limited by what you can fit into the viewfinder. Signs, posters and documents out in the real world aren't always designed to fit well on a phone-shaped display.

Any text detected by Visual Intelligence can be copied and pasted elsewhere, for example if you want to make a note of something you've passed by to look up later. It's something you've been able to do with images on your iPhone since Live Text debuted in iOS 15, but including it here too is a wise choice.

It's the same story with Visual Intelligence's ability to scan QR codes. Again, this is something your iPhone's regular camera can do, but there's nothing wrong with having it available in Visual Intelligence mode too.

You can also translate the text if it's written in a different language to the one you're using, which is why I now know what the name of the Italian restaurant near the TG office literally means.

To me, the most impressive thing Visual Intelligence can do right now is grab phone numbers, emails and dates from an image, and suggest further actions. From a single shot, you can send a message to these numbers or emails, add them to your Contacts, or make a calendar event with just a couple of taps.

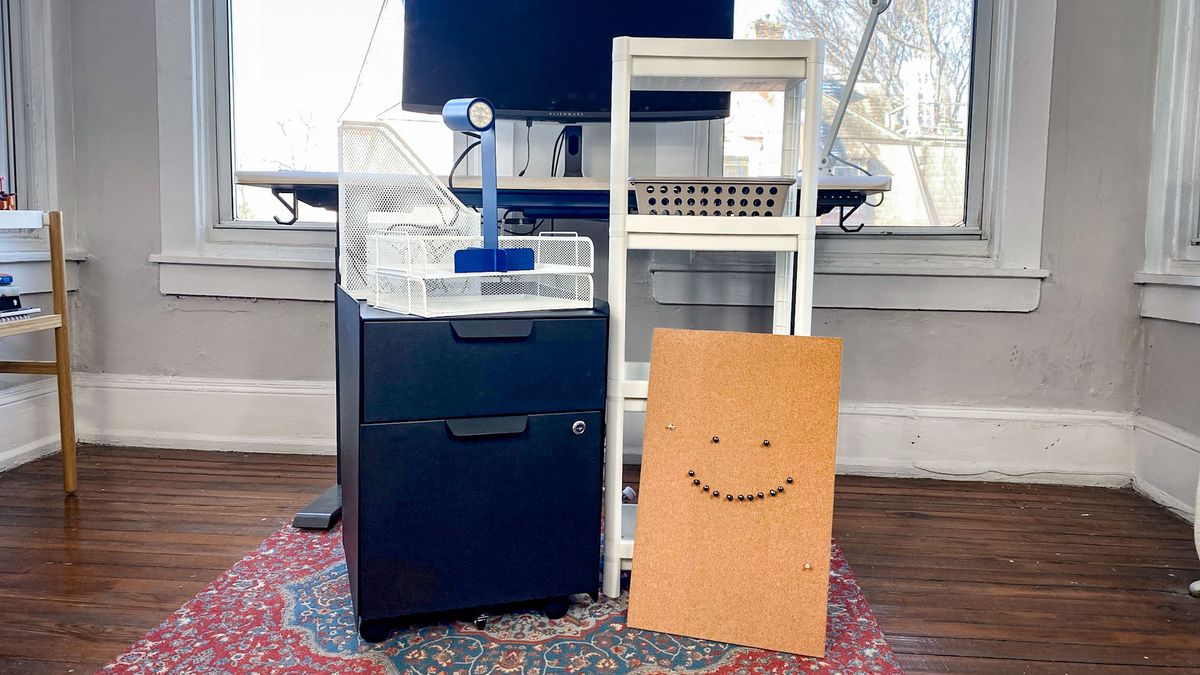

Going beyond the on-device features, Visual Intelligence lets you start an image search for an item on Google, which worked perfectly well for me each time I used it. It correctly identified my mug, for example, and offered up some relevant websites to learn more or buy another.

Perhaps the ability with the most potential is the option to explore a Visual Intelligence image further via ChatGPT integration, something also added in the iOS 18.2 beta. However, I kept getting an error message saying ChatGPT couldn't use screenshots from Visual Intelligence. Plus, I kept getting errors when trying to log into my OpenAI account to see if that would fix the problem. That's the beta lifestyle, folks, so we'll have to revisit the ChatGPT-powered parts of Visual Intelligence at a later date.

Visual Intelligence: Outlook

Visual Intelligence has me impressed already, even if there is clearly more work to be done at this point. It feels like the equal and opposite to Circle to Search, which is designed to offer similar information and services, except for what's on your screen.

Circle to Search can work similarly to Visual Intelligence if you activate it while your camera's open and looking at what you want to search. But Visual Intelligence has been designed to work much more smoothly with iOS and its associated apps. It's just a pity it's limited to only the latest iPhones.

There's still plenty of time to go until Visual Intelligence arrives on regular users' iPhone 16 devices, and hopefully the only way is up for this feature as future betas refine it. While we love the iPhone 16 series already, the addition of Visual Intelligence will finally give it something completely fresh over previous iPhones — and give potential upgraders the reason they need to upgrade.
