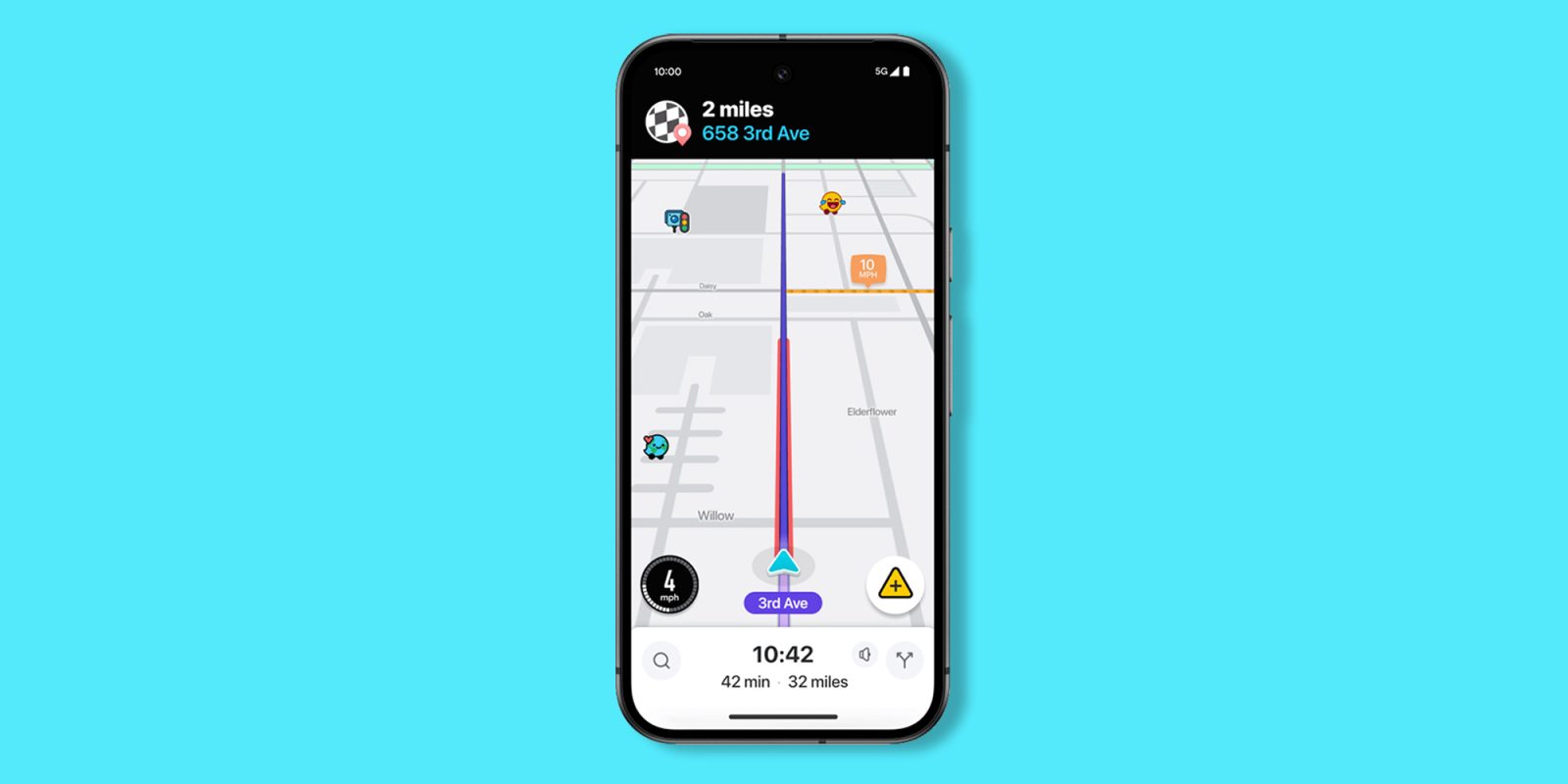

Waze is adding an AI-powered “Conversational Reporting” feature for incidents and obstructions when using the navigation system.

Building on the crowd-sourced nature of the Waze application, you will soon be able to use natural-language voice commands to tell the navigation system about an incident or obstruction while driving – or as a passenger.

Instead of requiring extra screen taps and touches, this enhanced function allows you to focus on the road and describe what you see, such as “There is a mattress in the road” or “There is a traffic jam up ahead.” Waze may ask follow-up questions if more information is required or specific details are needed, such as “Can you describe what you see?”

To start, tap the “reporting” button and speak naturally. The AI understands context and natural language, so you do not need to remember specific phrases.

Once accepted, Waze will use the data from “Conversational Reporting” to populate real-time maps and inform other road users of the obstacle, obstruction, or incident.

Conversational Reporting is being launched in beta on the Waze app for Android and iOS globally. However, it is only available in English. A wider range of language support for the feature is expected in the “coming months.”

Waze is also adding live school zones to maps with the ability for users to edit or alter these areas. When driving near or through a school zone, you will be given extra cues to help ensure the safety of pedestrians and other road users. This feature will begin rolling out on Android and iOS “later this year.”

More on Waze:

- Police officers will now report themselves on Google Maps and Waze in one US state

- New Google Maps incident reporting comes to mobile & Android Auto, Waze gets lockscreen navigation

- Waze adding alerts for speed limit changes, roundabouts, speed bumps, more
